M&E Journal: The Serverless Revolution

By Ian Hamilton, CTO, Signiant

Ever since moving images were first used for entertainment in the silent film era, constant technical advances have created opportunities for enhancing the consumer experience—and for bringing efficiencies to the creation and distribution of content.

Starting with the basic image delivery mechanisms, television and film are technically differentiated by the fundamental ways in which they capture, transfer and reproduce moving images and sound. Television delivers images and sound electronically, while film uses a physical medium and photochemical processes. But with the advent of videotape for storing television signals in the 1950s, the difference between what could be accomplished with television and film technologies began to blur. Modern consumers tend to treat the two as synonymous, and associate both with brands like Netflix and YouTube.

The melding of information and media technologies

Similarly, the difference between information technology (IT) and media technology has blurred considerably. Media technology has historically been entirely purpose-built for media, originally based on analog electronics and mechanical components for the early media supply chains and shifting toward digital technology during the final chapters of the last century. Once Moore’s Law created sufficient processing power, bandwidth and storage to handle professional media, these purpose-built digital products gave way to ever-thinner layers of “media aware” functionality operating on standard IT platforms.

IT itself has gone through parallel changes. Software first ate hardware and now the cloud is eating software. Even the cloud is morphing from on-demand servers, storage and network infrastructure to serverless technologies. While the definition of “serverless” is being stretched by some vendors, the central tenet is that code or logic is fully abstracted from the servers it executes on. This allows business logic to be reduced to a set of “when this happens do that” rules, which is fundamentally changing how workflows are designed in the cloud era.

Impact on media workflows

So how will this serverless revolution impact media workflows? Is the specialized world of media now merged closely enough with IT that mission-critical media businesses can be operated with loosely coupled, event-driven cloud systems? Media workflows have evolved considerably, with the highly customized, tightly coupled systems of the early file-based years eventually giving way to flexible service orchestrations. Can media technology continue to evolve by taking the leap into this next generation of cloud services?

Just as a film workflow pipeline can be emulated with a digital workflow, file-based media processing workflows can simply be “lifted-and-shifted” into the cloud. That might work, but it won’t result in transformational change. The biggest benefit from a new technology often requires a completely new approach to the problem that aligns with the spirit of the new technology. And similarly, much of the disappointment associated with new technologies often comes from not being able to take full advantage of the fundamental essence of the change.

The basic “as a service” layers of the cloud are very familiar these days: IaaS provides compute, storage and network on demand; PaaS provides cloud middleware services like databases and transfer acceleration; and SaaS refers to cloud applications. All layers can be used to implement cloud workflows, with IaaS providing ultimate flexibility for techies, and SaaS providing turnkey business functionality. Generally speaking, the highest leverage is achieved with SaaS followed by PaaS and finally IaaS, where the higher layers abstract lower layer details.

SaaS offerings typically support application-specific REST-based APIs that allow other systems to initiate behavior in the application and to be notified when something meaningful happens in it. These interactions are as simple to initiate as typing a URL in a browser (with appropriate security, of course). SaaS can be linked with other PaaS services through these same mechanisms to implement the “when this happens do that” chain of a workflow. The only centralized component of the workflow in the cloud then becomes a logically centralized view of what’s happening in the system, built from the stream of event notifications it receives.

Let’s get concrete with an example of how to implement a simple media workflow. Using a serverless framework (such as AWS Lambda, Google Cloud Functions, or Microsoft Azure Functions), developers can define a trigger and the logic that executes when an event occurs. With a SaaS product (for example, Signiant Media Shuttle) that transfers media files into a customer’s cloud storage tenancy and delivers notifications when a new file is uploaded, it is possible to “catch events” at two levels. Storage events at the infrastructure level report when new content is created in storage (in an AWS S3 bucket, for example), and application-level delivery events provide more context about where the content came from (chain-of-custody information).

Creation in the cloud

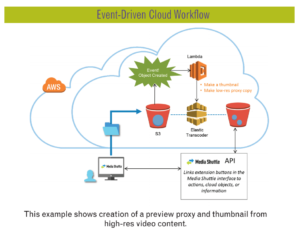

The diagram illustrates an example of an extremely simple event-driven cloud workflow of this nature; in this case the creation of a preview proxy and thumbnail from high-resolution video content uploaded to AWS S3 storage using Signiant’s Media Shuttle.
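As a rough illustration of the storage-event side of this workflow, here is a minimal Python sketch of a function in the style of an AWS Lambda handler. It reads the bucket and key from an S3 "object created" notification and plans a proxy job and a thumbnail job. The `proxies/` and `thumbnails/` prefixes, the list of video extensions, and the job dictionaries are assumptions made for illustration; a real deployment would hand these jobs to an actual transcoding service rather than return them.

```python
from urllib.parse import unquote_plus

# Assumed for illustration: which uploads count as high-resolution sources.
VIDEO_EXTENSIONS = (".mov", ".mxf", ".mp4")
# Assumed output locations; skipping them avoids re-triggering on our own output.
DERIVATIVE_PREFIXES = ("proxies/", "thumbnails/")


def plan_derivatives(bucket: str, key: str) -> list:
    """Describe the proxy and thumbnail jobs for one uploaded source file."""
    stem = key.rsplit(".", 1)[0]
    source = f"s3://{bucket}/{key}"
    return [
        {"type": "proxy", "source": source,
         "target": f"s3://{bucket}/proxies/{stem}.mp4"},
        {"type": "thumbnail", "source": source,
         "target": f"s3://{bucket}/thumbnails/{stem}.jpg"},
    ]


def handler(event: dict, context: object = None) -> list:
    """Entry point: turn S3 'object created' records into derivative jobs."""
    jobs = []
    for record in event.get("Records", []):
        if not record.get("eventName", "").startswith("ObjectCreated"):
            continue
        bucket = record["s3"]["bucket"]["name"]
        # Object keys arrive URL-encoded in S3 notifications.
        key = unquote_plus(record["s3"]["object"]["key"])
        if key.startswith(DERIVATIVE_PREFIXES):
            continue
        if key.lower().endswith(VIDEO_EXTENSIONS):
            jobs.extend(plan_derivatives(bucket, key))
    return jobs
```

The function holds no state and knows nothing about the machine it runs on; everything it needs arrives in the event itself.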

In such an event-driven cloud workflow, logic can then be defined to process the subsequent event messages—e.g. proxy “object created”—to initiate follow-on actions. For example, a follow-on action might include a lookup in a business system to determine what formats the media is needed in to complete a delivery, along with the time the media is required, and hence the priority.
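The follow-on lookup described above might be sketched as follows. The `DELIVERY_ORDERS` table stands in for a real business system, and the asset identifier, format names, and priority thresholds are all hypothetical values chosen for the example.

```python
from datetime import datetime, timedelta, timezone

# Hypothetical stand-in for a business system: what each asset must be
# delivered as, and by when.
DELIVERY_ORDERS = {
    "promo_0417": {
        "formats": ["h264_1080p", "prores_422"],
        "due": datetime(2017, 4, 20, 12, 0, tzinfo=timezone.utc),
    },
}


def priority_for(due: datetime, now: datetime) -> str:
    """Map time remaining to a priority (thresholds are assumptions)."""
    remaining = due - now
    if remaining <= timedelta(hours=4):
        return "high"
    if remaining <= timedelta(hours=24):
        return "normal"
    return "low"


def on_proxy_created(event: dict, now: datetime) -> list:
    """React to a proxy 'object created' event with one job per format."""
    order = DELIVERY_ORDERS.get(event["asset_id"])
    if order is None:
        return []  # nothing is waiting on this asset
    priority = priority_for(order["due"], now)
    return [
        {
            "asset_id": event["asset_id"],
            "format": fmt,
            "due": order["due"].isoformat(),
            "priority": priority,
        }
        for fmt in order["formats"]
    ]
```

Passing `now` in as an argument, rather than reading the clock inside the function, keeps the rule deterministic and easy to test.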

The service could then insert a job in a transcoder queue that would then trigger another event when the transcode was complete, which in turn could trigger additional actions defined by logic operating on those event notifications. And so on along a linear or branching, logic-defined and event-driven, media process chain. None of these actions require any knowledge of the physical infrastructure the logic executes on in the cloud, or awareness of prior events. Of course, chain-of-custody and overall status can still be determined by logging and viewing these events.
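To make the chain concrete, the sketch below models it in plain Python with an in-memory dispatcher. The event type names and handlers are invented for illustration, and the transcode step is a stand-in; in a real system each handler would be a separate serverless function and the queue would be a managed cloud service.

```python
from collections import deque

RULES = {}  # event type -> list of handler functions


def when(event_type: str):
    """Register a handler: 'when this happens, do that'."""
    def register(fn):
        RULES.setdefault(event_type, []).append(fn)
        return fn
    return register


@when("source.uploaded")
def queue_transcode(event: dict) -> list:
    return [{"type": "transcode.queued", "asset": event["asset"]}]


@when("transcode.queued")
def run_transcode(event: dict) -> list:
    # Stand-in for a real transcoder finishing its work.
    return [{"type": "transcode.complete", "asset": event["asset"]}]


@when("transcode.complete")
def deliver_and_notify(event: dict) -> list:
    # One event can branch into several follow-on actions.
    return [
        {"type": "delivery.started", "asset": event["asset"]},
        {"type": "notification.sent", "asset": event["asset"]},
    ]


def dispatch(first_event: dict) -> list:
    """Run the chain and return the event log (the centralized view)."""
    log = []
    pending = deque([first_event])
    while pending:
        event = pending.popleft()
        log.append(event)
        for handler in RULES.get(event["type"], []):
            pending.extend(handler(event))
    return log
```

Each handler sees only the event it is given and has no awareness of prior events; the returned log is the only place where the whole chain is visible, which is where chain-of-custody and overall status would be read from.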

This approach affords several advantages. The mapping of jobs to available hardware could consider variable costs of compute in a way that is completely abstracted from the logic that decides what transcode is required and by when. This logic could be implemented by a transcoding service that provides this level of abstraction as a service, or it could utilize transcode software provisioned directly on top of cloud compute.

In the latter case, it could manage the capacity of the transcode farm based on availability of spot instances at given price points, and vary the spot instance price point up to and including the on-demand price, based on the backlog of high priority transcodes.
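One simple way to express such a policy is a linear ramp from a baseline price to the on-demand price as the high-priority backlog grows. The function below is only a sketch of that idea; the parameter names, the linear shape, and the example prices are assumptions, not a description of any particular product or cloud API.

```python
def spot_price_point(
    base_price: float,
    on_demand_price: float,
    high_priority_backlog: int,
    backlog_at_max: int,
) -> float:
    """Return the spot price point to accept for the transcode farm.

    The price rises linearly from base_price (empty backlog) to
    on_demand_price (backlog at or above backlog_at_max) and never
    exceeds the on-demand price.
    """
    if backlog_at_max <= 0:
        raise ValueError("backlog_at_max must be positive")
    pressure = min(max(high_priority_backlog, 0) / backlog_at_max, 1.0)
    price = base_price + pressure * (on_demand_price - base_price)
    return round(min(price, on_demand_price), 4)
```

With a baseline of 0.10 and an on-demand price of 0.40 per instance-hour, an empty backlog keeps the price point at the baseline, and a backlog at or beyond `backlog_at_max` raises it to the on-demand price, at which point spot capacity no longer offers a saving.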

Every previous phase of file-based media workflows, from early “file islands” to tightly coupled bespoke systems and on through the loosely coupled on-premises solutions of more recent years, has suffered from a common problem. No matter how elegantly “abstracted” the solution might be architecturally, in reality there was always a lot of physical infrastructure (hardware and software) that needed to be built, tested, deployed and supported. This took time and money, and frequently led to systems that could not be expanded or substantially modified without another round of substantial investment.

Often it was simpler to scrap the previous build and just begin again as technology aged and changed, or as what was originally built no longer matched the business needs in terms of performance, cost, efficiency, reliability or functionality.

This long-established pattern of initiating large IT-driven media workflow projects, only to see them become obsolete before they have even paid for themselves (or in some cases even worked as planned), has led many to a justifiable skepticism of yet another new approach.

However, the marriage of event-driven workflows with the “Everything as a Service” offerings of the cloud perhaps finally offers a model for success—even for media, that most demanding of industries.

—

Click here to download the entire Spring 2017 M&E Journal